Enfuse for Extended Dynamic Range and Focus Stacking in Microscopy

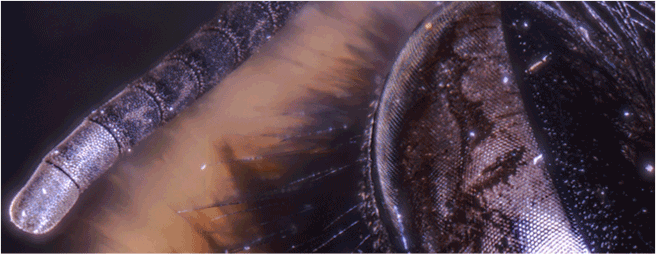

Below is an image of the right eye and antennae of a bee produced from a stack of different photographs combined with Enfuse.

Enfuse is open source software designed to combine multiple images containing different exposures of the same scene into a single image that is as well exposed as possible. This process is called exposure fusion, not to be confused with the popular high dynamic range (HDR) techniques. HDR techniques build a single high dynamic range source image and then apply a tone mapping operator to compress it into a range that a monitor can display or a printer can print. Enfuse skips the intermediate HDR image and the tone mapping step, and instead combines pixels from the source images directly into an output image by assigning each pixel a weighting based on contrast, exposure and saturation calculations in the pixel’s local area. Pixels at each coordinate in the image stack are then combined according to their weightings.
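To make the weighting idea concrete, here is a minimal pure-Python sketch of per-pixel fusion using only an exposure criterion. It is not Enfuse's implementation: Enfuse also weighs contrast and saturation and blends the images at multiple resolutions, whereas this sketch does a naive weighted average at each pixel. The function names and the `optimum` and `width` values are assumptions chosen for illustration.

```python
import math

def exposure_weight(value, optimum=0.5, width=0.2):
    """Gaussian weight: highest for pixels near mid-grey, lowest near 0 or 1."""
    return math.exp(-((value - optimum) ** 2) / (2 * width ** 2))

def fuse_pixel(values):
    """Weighted average of one pixel position across the stack."""
    weights = [exposure_weight(v) for v in values]
    total = sum(weights)  # always > 0 because the Gaussian never reaches zero
    return sum(w * v for w, v in zip(weights, values)) / total

def fuse_stack(stack):
    """Fuse equally sized greyscale images given as rows of floats in [0, 1]."""
    height, width = len(stack[0]), len(stack[0][0])
    return [
        [fuse_pixel([image[y][x] for image in stack]) for x in range(width)]
        for y in range(height)
    ]

# Three 1x2 "images": under-exposed, mid, and over-exposed versions of a scene.
dark = [[0.05, 0.10]]
mid = [[0.50, 0.60]]
bright = [[0.95, 0.99]]
fused = fuse_stack([dark, mid, bright])
```

Pixels that are close to mid-grey in one exposure dominate the result, so blown-out or crushed pixels from the other exposures contribute very little.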

This method of combining pixels means that Enfuse is good not only at combining multiple exposures but also at combining focus stacks: images that are focussed on different parts of the subject. By basing the weighting heavily on local neighborhood contrast rather than saturation and exposure, and telling Enfuse to use only the pixel with the highest weighting from the stack (rather than blending pixels in the stack based on weighting), we can perform focus stacking.
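As a rough sketch of how those settings translate to the command line, the helper below builds an `enfuse` invocation that turns off exposure and saturation weighting, weights on contrast alone, and enables hard masking so that only the highest-weighted pixel is used. The helper names and file names are hypothetical, and the best contrast window size depends on the images, so it is left optional here.

```python
import subprocess

def focus_stack_command(inputs, output, window_size=None):
    """Build an enfuse command line for focus stacking (does not run it)."""
    cmd = [
        "enfuse",
        "--exposure-weight=0",    # ignore how well exposed each pixel is
        "--saturation-weight=0",  # ignore colour saturation
        "--contrast-weight=1",    # weight purely on local contrast
        "--hard-mask",            # take only the highest-weighted pixel
    ]
    if window_size is not None:
        cmd.append(f"--contrast-window-size={window_size}")
    cmd.append(f"--output={output}")
    cmd.extend(inputs)
    return cmd

def run_focus_stack(inputs, output, window_size=None):
    """Run enfuse; assumes the enfuse binary is installed and on the PATH."""
    subprocess.run(focus_stack_command(inputs, output, window_size), check=True)

# Hypothetical file names, for illustration only.
example = focus_stack_command(["z000.tif", "z001.tif", "z002.tif"], "stacked.tif")
```

Building the argument list separately from running it makes it easy to print and inspect the command before committing to a long batch.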

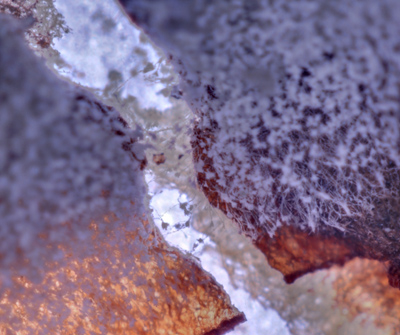

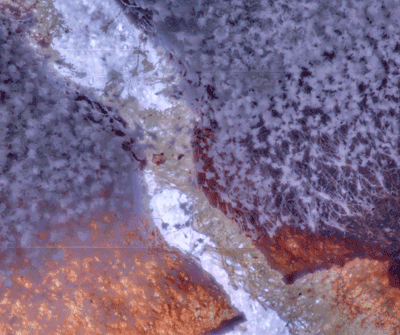

I recently tried Enfuse on images captured directly from a microscope and found it very useful when the subject contains both light and dark (and matte and glossy) areas that are difficult to illuminate correctly with a single lighting setup. The images below show six different exposures of a piece of mould growing on a coffee cup that was in the PhD lab at the time. There’s a crack in the sample and the light from underneath shines through, making it difficult to see the detail in that area without underexposing the top.

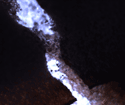

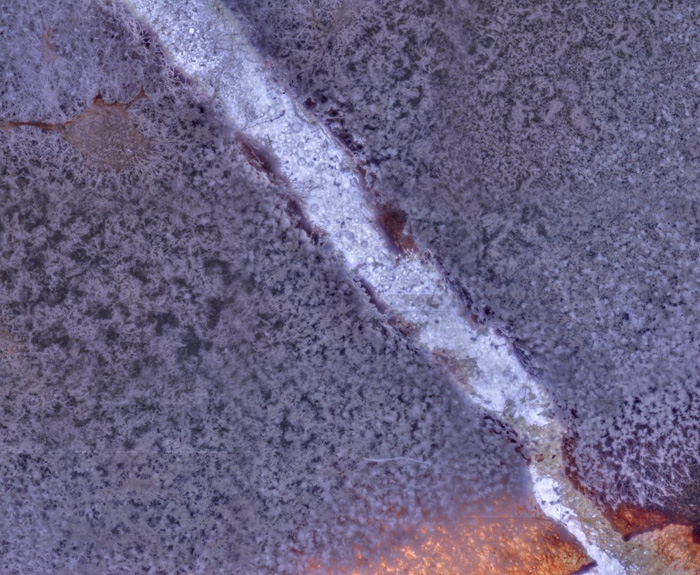

When these are combined in Enfuse we get an output that looks like the one below with no over- or under-exposed areas.

I wrote a Python wrapper for the microscope-mounted camera to capture photos and another Python wrapper for the stage’s RS-232 instruction set, then set up an automated process that takes six different exposures, moves the stage along the Z axis and repeats. After fusing each set of six exposures into a single output and then combining those outputs in a focus stack, we get an image that is evenly exposed and sharp through the whole depth of the sample.
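The capture loop could be structured roughly as follows. This is a sketch under assumptions, not the actual wrappers: the `move_z`, `set_exposure` and `capture` methods, the dry-run classes, the file naming and the exposure and step values are all hypothetical stand-ins for whatever the real camera and stage interfaces provide.

```python
class DryRunStage:
    """Hypothetical stage stand-in: records moves instead of sending RS-232 commands."""
    def __init__(self):
        self.moves = []

    def move_z(self, position_um):
        self.moves.append(position_um)

class DryRunCamera:
    """Hypothetical camera stand-in: records shots instead of exposing a sensor."""
    def __init__(self):
        self.exposure_ms = None
        self.shots = []

    def set_exposure(self, exposure_ms):
        self.exposure_ms = exposure_ms

    def capture(self, filename):
        self.shots.append((filename, self.exposure_ms))
        return filename

def capture_brackets(camera, stage, exposures_ms, z_start_um, z_step_um, z_count):
    """Take one exposure bracket per Z position; return a list of filename lists."""
    brackets = []
    for z_index in range(z_count):
        stage.move_z(z_start_um + z_index * z_step_um)
        bracket = []
        for exposure_index, exposure_ms in enumerate(exposures_ms):
            camera.set_exposure(exposure_ms)
            bracket.append(camera.capture(f"z{z_index:03d}_exp{exposure_index}.tif"))
        brackets.append(bracket)
    return brackets

# Six illustrative exposures at each of three Z positions, 5 um apart.
stage, camera = DryRunStage(), DryRunCamera()
brackets = capture_brackets(camera, stage, [1, 2, 4, 8, 16, 32], 0.0, 5.0, 3)
```

Each inner list holds the six files for one Z position, so it can be exposure-fused on its own before the fused results are passed on to the focus stacking step.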

Now that we’ve shown that focus stacking works nicely on exposure-fused images from the microscope, we can expand the program to move the stage around and build up a mosaic as shown below.

With a different subject that has a much greater physical depth (more Z-steps) we can produce something that looks like the bee below. This image is a composite of 3600 source images that were automatically combined with Enfuse and then stitched together into the mosaic manually. The output is something that would never be visible to the human eye due to physical limitations of the lens, and the final composite is around 90 megapixels. The image here is a heavily reduced version of it. A full resolution version can be found here (warning: 10 megabyte JPEG file, 7680x11728px, may crash your browser).